Apache Kafka

Apache Kafka Tutorial for Beginners

- Last updated Apr 25, 2024

Apache Kafka is a data streaming platform developed by Apache Software Foundation. It is written in Java and Scala. The development of Apache Kafka was originally started at LinkedIn and became open-source in 2011.

Apache Kafka is used to capture data in the form of streams in real-time from various event sources such as mobile devices, sensors, software applications, cloud services, and databases. The event data are stored durably for later retrieval and processing to generate quick interpretation of data in real-time.

Apache Kafka is a publish-subscribe, distributed, highly scalable, fault-tolerant messaging system.

What is a Messaging System?

A messaging system is a system that is responsible for transferring data from one application to another by focusing only on data and not worrying about how the data is transferred and shared. In a messaging system, messages are queued asynchrously between client applications and messaging system since distributed messaging are based on the concept of reliable message queuing.

There are two types of messaging patterns:

- Point-to-Point: In a point-to-point messaging system, all messages are persisted in a queue and can be consumed by any consumers. However, a particular message can be consumed to a maximum limit of one time only. A message disappears after it is consumed once by a consumer.

- Publish-Subscribe (pub-sub): In a publish-subscribe messaging system, all messages are persisted in topics. A topic is a virtual data storage mechanism in which records are stored and published. A consumer can subscribe to any number of topics and may consumed all messages from those topics without being worried about messages getting disappeared. In a publish-subscribe messaging system, publishers are producers of messages and subscribers are consumers.

Apache Kafka Core Capabilities

Apache Kafka was designed to provide a very powerful platform that is capable of handling real-time data feeds. Some of the core capabilities of Apache Kafka are:

- Scalable: Apache Kafka is a highly scalable messaging system. Unlike in other messaging system, Kafka clusters can be scaled up to a thousand brokers, hundred and thousands of partitions with the capacity of handling trillion of messages per day and storing capacity of petabytes of data.

- High-Throughput: The read/write throughput of Kafka is very high as compared to other messaging systems.

- Low-Latency: Apache Kafka end to end data transfer latency is as low as 2ms.

- Permanent Storage: Apache Kafka is capable of storing streams of data in a fault-tolerable, durable, and distributed cluster.

- High Availability: Kafka is a distributed system, the same model can be deployed across different geographic regions.

Where can you deploy Kafka?

Kafka can be deployed on any virtual machines, containers, cloud environments, and bare-metal hardware.

Servers and Clients in Kafka

Kafka consists of servers and clients that communicates using a high-performance binary TCP protocol:

Servers: Kafka is run as a cluster of one or more servers. Some of these servers form the storage layer, called the brokers and other servers run Kafka connect. Kafka Connect is a tool which is responsible for streaming data between Apache Kafka and your system such as relational databases and other Kafka clusters. Kafka clusters are highly fault-tolerant that is if one server fails, the other server will take over their work to keep the system running without any data loss.

Clients: Clients are used for writing distributed applications and microservices to read, write, and process streams of events in a fault-tolerant manner. Clients are available for Java and Scala including libraries for C/C++, Python, Go and many other programming languages as well as REST APIs.

Kafka Basic Terminology

Event: An event is something important that happens or takes place around you or your business. Records or messages are written to Kafka in the form of events. An event has a key, value, timestamp, and other metadata headers. For example:

- Event key : "Jake"

- Event value : "Made a payment of $1600 at college"

- Event timestamp : "August 14, 2020 at 10:11 am"

Producers: Producers are client applications that publish (write) events to Kafka.

Consumers: Consumers are applications that subscribe to read and process the events.

Topics: Topics are categories to where events are organized and durably stored. A topic is similar to a folder in a file system and events are files in that folder. A topic can have zero or multiple producers and consumers. Multiple producers can publish or write events to a topic. Similarly, multiple consumers can subscribe to read and process events from a topic. Events in topic are not deleted after consumption and therefore events can be read as many times as needed. You can configure for how long Kafka should retain events through per topic configuration settings. So, it is fine to store data in topics for any longer period of time as the size of data does not affect Kafka's performance.

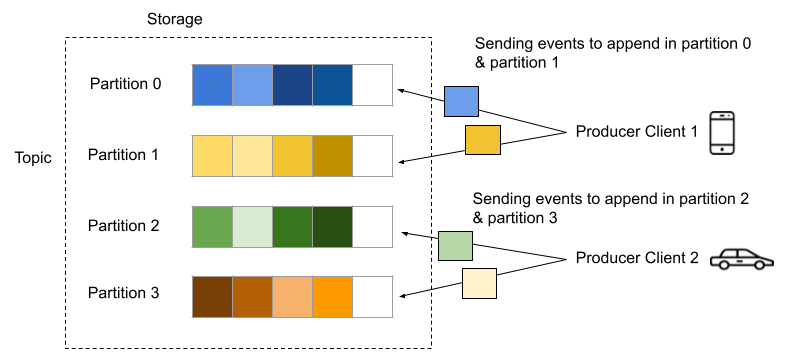

A topic is divided into single or multiple partitions. Each Partition is an immutable sequence of messages. Messages within the partitions are appended in an ordered form. Each message is assigned a unique sequential id called offset that identifies a message with the partition.

Data in Kafka is distributed across different brokers. This is important for scalability as it allows client applications to read and write event data to/from many brokers simultaneously. Event data with the same event key is always appended in the same partition of a topic.

The topic in the example has four partitions. Two different producer clients are publishing events data to the topic independently. Event data with the same event key are written to the same partition of the topic.

Every topic can be replicated across geo-regions or datacenters in order to make the data highly-available and fault-tolerant. The replication makes your data available in multiple brokers. Data are usually replicated at the level of topic partitions. The replication factor of production setting is usually 3, meaning there will be three copies of your data.