Set Up and Run Apache Kafka on Ubuntu, Linux, or macOS

To get started with Apache Kafka on Ubuntu, Linux, or macOS, complete the instructions given in this quick start tutorial.

Note: Your computer must have Java 8+ installed.
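The Java requirement can be checked from the command line before going further. The following is a minimal sketch, not an official Kafka tool: the function names java_major_version and installed_java_version are hypothetical, and the sketch assumes that 'java -version' prints the version in double quotes on its first line, as common JDK builds do.

```shell
#!/usr/bin/env bash
# Extract the major Java version from a version string such as
# "1.8.0_292" (Java 8) or "17.0.2" (Java 17).
java_major_version() {
  local version="$1"
  case "$version" in
    1.*) version="${version#1.}" ;;   # legacy scheme: 1.8.x means Java 8
  esac
  echo "${version%%[!0-9]*}"          # keep only the leading digits
}

# Read the quoted version string from the first line of `java -version`,
# which is printed on standard error.
installed_java_version() {
  java -version 2>&1 | head -n 1 | sed 's/.*"\(.*\)".*/\1/'
}

# Example usage:
#   major="$(java_major_version "$(installed_java_version)")"
#   [ "$major" -ge 8 ] && echo "Java $major is new enough for Kafka"
```

Splitting the parsing from the call to 'java' keeps the version logic usable even on a machine where Java is not installed yet.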

Follow these steps to set up and run Apache Kafka on a local machine:

Downloading Apache Kafka

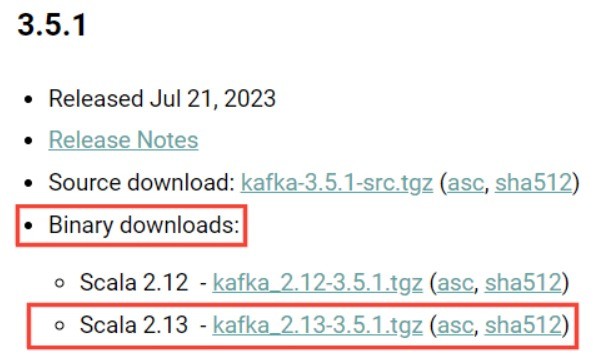

Go to the official Apache Kafka download page at https://kafka.apache.org/downloads and download the latest stable binary version.

Extracting the Downloaded file

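After the download is complete, extract the contents of the tar file and navigate to the extracted Kafka folder. The commands in this tutorial assume the file kafka_2.13-3.5.1.tgz; adjust the name if you downloaded a different version: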

tar -xzf kafka_2.13-3.5.1.tgz
cd kafka_2.13-3.5.1

Starting ZooKeeper and Apache Kafka

Now, open a terminal and navigate to the extracted Kafka folder by using the command 'cd kafka_2.13-3.5.1'. The scripts are run from this folder, not from inside 'bin'. Start ZooKeeper by executing the 'bin/zookeeper-server-start.sh' script with the 'config/zookeeper.properties' file:

bin/zookeeper-server-start.sh config/zookeeper.properties

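Make sure that ZooKeeper started successfully before continuing. The process keeps running in the foreground of this terminal and, with the default configuration, listens on port 2181.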

Next, open a new terminal and navigate to the extracted Kafka folder by using the command 'cd kafka_2.13-3.5.1'. Start the Apache Kafka broker by executing the 'bin/kafka-server-start.sh' script with the 'config/server.properties' file:

bin/kafka-server-start.sh config/server.properties

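Make sure that the Kafka broker also started successfully. With the default configuration it listens on port 9092, which is the address used by the commands in the rest of this tutorial.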
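One way to confirm that both services are up is to check that their ports accept connections. The sketch below assumes the default ports (2181 for ZooKeeper, 9092 for the broker) and relies on Bash's /dev/tcp feature, so it needs Bash rather than a plain POSIX shell. The function name wait_for_port is hypothetical. An open port only shows that the process is listening; the terminal logs remain the authoritative place to look for startup errors.

```shell
#!/usr/bin/env bash
# Return 0 as soon as host:port accepts a TCP connection, or 1 after
# the given number of attempts (one attempt per second, 30 by default).
wait_for_port() {
  local host="$1" port="$2" attempts="${3:-30}"
  local i=0
  while [ "$i" -lt "$attempts" ]; do
    # /dev/tcp is a Bash feature: the redirection succeeds only if the
    # connection can be opened; the subshell closes it again on exit.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Example usage (default ports assumed):
#   wait_for_port localhost 2181 && echo "ZooKeeper is accepting connections"
#   wait_for_port localhost 9092 && echo "Kafka broker is accepting connections"
```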

Creating a Topic

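A topic is needed to store events. A topic in Kafka is like a table in a database: it is where the data is stored. You can have multiple topics for different kinds of events.

To create a new topic, use the kafka-topics.sh tool from the command line. Replace 'my-topic-name' with the name of your topic. The command below creates the topic with 5 partitions and a replication factor of 1, the highest value possible with a single broker: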

bin/kafka-topics.sh --create --topic my-topic-name --partitions 5 --replication-factor 1 --bootstrap-server localhost:9092

You can also view details of the new topic by running the following command:

bin/kafka-topics.sh --describe --topic my-topic-name --bootstrap-server localhost:9092
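Running the create command a second time fails because the topic already exists. A minimal sketch of a repeatable version is shown here, using the --if-not-exists option of kafka-topics.sh. The function name ensure_topic and the variables KAFKA_HOME and BOOTSTRAP_SERVER are assumptions introduced for illustration; KAFKA_HOME is expected to point at the extracted Kafka folder.

```shell
#!/usr/bin/env bash
# Assumptions: KAFKA_HOME is the extracted Kafka folder (defaults to the
# current directory) and the broker listens on localhost:9092.
KAFKA_HOME="${KAFKA_HOME:-.}"
BOOTSTRAP_SERVER="${BOOTSTRAP_SERVER:-localhost:9092}"

# Create the topic only if it does not exist yet, then print its details.
ensure_topic() {
  local topic="$1" partitions="${2:-5}"
  "$KAFKA_HOME/bin/kafka-topics.sh" --create --if-not-exists \
    --topic "$topic" --partitions "$partitions" --replication-factor 1 \
    --bootstrap-server "$BOOTSTRAP_SERVER" &&
  "$KAFKA_HOME/bin/kafka-topics.sh" --describe --topic "$topic" \
    --bootstrap-server "$BOOTSTRAP_SERVER"
}

# Example usage:
#   ensure_topic my-topic-name 5
```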

Writing Events to the Topic

A Kafka client communicates with the Kafka broker to write events. Once the events are written, the broker stores them for as long as the topic's retention settings allow (seven days by default).

Kafka client libraries are available for many programming languages, and applications use them to send events to Kafka topics. An application that sends data to a Kafka topic is called a producer application.

You can also use the kafka-console-producer.sh tool to start a console producer for writing events to the topic. By default, each line you enter is treated as a separate event. For example:

$ bin/kafka-console-producer.sh --topic my-topic-name --bootstrap-server localhost:9092
>This is my first event
>This is my second event
>This is my third event

The console producer can be stopped by pressing Ctrl+C at any time.
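Because the console producer reads events from standard input, it can also be fed from a pipe or a file instead of the keyboard. The sketch below illustrates this. It also shows the producer properties parse.key and key.separator, which split each line into a key and a value. The function names and the KAFKA_HOME and BOOTSTRAP_SERVER variables are assumptions made for this example.

```shell
#!/usr/bin/env bash
# Assumptions: KAFKA_HOME is the extracted Kafka folder (defaults to the
# current directory) and the broker listens on localhost:9092.
KAFKA_HOME="${KAFKA_HOME:-.}"
BOOTSTRAP_SERVER="${BOOTSTRAP_SERVER:-localhost:9092}"

# Send every line read from standard input to the topic as one event.
produce_lines() {
  local topic="$1"
  "$KAFKA_HOME/bin/kafka-console-producer.sh" --topic "$topic" \
    --bootstrap-server "$BOOTSTRAP_SERVER"
}

# Same, but treat the text before the first ':' on each line as the event key.
produce_keyed_lines() {
  local topic="$1"
  "$KAFKA_HOME/bin/kafka-console-producer.sh" --topic "$topic" \
    --bootstrap-server "$BOOTSTRAP_SERVER" \
    --property parse.key=true --property key.separator=:
}

# Example usage:
#   printf '%s\n' "first event" "second event" | produce_lines my-topic-name
#   printf '%s\n' "user1:logged in" | produce_keyed_lines my-topic-name
```

When the input ends, the producer exits on its own, so no Ctrl+C is needed in this mode.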

Reading Events from the Topic

A Kafka client communicates with the Kafka broker to read events.

You can use Kafka client libraries in your application to read events from Kafka topics. An application that reads data from Kafka topics is called a consumer application.

You can also use the console consumer client to read the events that you created. The --from-beginning option makes the consumer read all events stored in the topic, not only new ones. Use the following command to read events:

bin/kafka-console-consumer.sh --topic my-topic-name --from-beginning --bootstrap-server localhost:9092

You can stop the Consumer client by pressing Ctrl+C at any time.
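For scripted checks it can be more convenient if the consumer exits on its own. The following sketch uses the --max-messages and --timeout-ms options of kafka-console-consumer.sh to stop after a fixed number of events, or when no event arrives within the timeout. The function name consume_events, its default values, and the KAFKA_HOME and BOOTSTRAP_SERVER variables are assumptions chosen for illustration.

```shell
#!/usr/bin/env bash
# Assumptions: KAFKA_HOME is the extracted Kafka folder (defaults to the
# current directory) and the broker listens on localhost:9092.
KAFKA_HOME="${KAFKA_HOME:-.}"
BOOTSTRAP_SERVER="${BOOTSTRAP_SERVER:-localhost:9092}"

# Read up to `count` events from the start of the topic, and give up if
# no event arrives within `timeout_ms` milliseconds.
consume_events() {
  local topic="$1" count="${2:-10}" timeout_ms="${3:-10000}"
  "$KAFKA_HOME/bin/kafka-console-consumer.sh" --topic "$topic" --from-beginning \
    --max-messages "$count" --timeout-ms "$timeout_ms" \
    --bootstrap-server "$BOOTSTRAP_SERVER"
}

# Example usage:
#   consume_events my-topic-name 3
```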

Stopping the Kafka Services

First, stop the Kafka console Producer and Consumer clients.

Next, stop the Kafka broker by pressing Ctrl+C.

Lastly, stop the ZooKeeper server by pressing Ctrl+C in its terminal.
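Ctrl+C only works when the services run in the foreground of a terminal. If they were started in the background, for example with the -daemon option of the start scripts, the stop scripts shipped in the 'bin' folder can be used instead. The sketch below keeps the same order as above: broker first, then ZooKeeper. The function name stop_kafka_services and the pause between the two steps are assumptions; the pause gives the broker some time to shut down, and the right length depends on your machine.

```shell
#!/usr/bin/env bash
# Assumption: KAFKA_HOME is the extracted Kafka folder (defaults to the
# current directory).
KAFKA_HOME="${KAFKA_HOME:-.}"

# Stop the broker first, wait a moment, then stop ZooKeeper.
stop_kafka_services() {
  local pause_seconds="${1:-5}"
  # The commands are not chained with &&, so ZooKeeper is stopped even
  # when no running broker was found.
  "$KAFKA_HOME/bin/kafka-server-stop.sh"
  sleep "$pause_seconds"
  "$KAFKA_HOME/bin/zookeeper-server-stop.sh"
}

# Example usage:
#   stop_kafka_services 10
```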

Import/Export Data as Streams of Events into Kafka

Sometimes, you may need to bring data from existing relational databases or messaging systems into Kafka. To achieve this, you can use Kafka Connect. Kafka Connect is a tool that streams data reliably and durably between Kafka and external systems. It can continuously import data from external systems into Kafka and export data from Kafka to external systems.
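As a small illustration, Kafka ships with sample file connectors that can be run in standalone mode. The sketch below assumes Kafka 3.5.1, where the file connector jar is libs/connect-file-3.5.1.jar and has to be added to plugin.path first; the jar name changes with the Kafka version. The function names and the KAFKA_HOME variable are hypothetical. With the sample configuration files, the source connector reads lines from test.txt into the topic connect-test, and the sink connector writes them to test.sink.txt.

```shell
#!/usr/bin/env bash
# Assumption: KAFKA_HOME is the extracted Kafka folder (defaults to the
# current directory).
KAFKA_HOME="${KAFKA_HOME:-.}"

# Add the file connector jar to plugin.path in the standalone worker
# config, unless an active plugin.path entry is already present.
enable_file_connector() {
  local jar="${1:-libs/connect-file-3.5.1.jar}"   # jar name assumes Kafka 3.5.1
  local config="$KAFKA_HOME/config/connect-standalone.properties"
  if ! grep -q '^plugin.path=' "$config"; then
    echo "plugin.path=$jar" >> "$config"
  fi
}

# Start a standalone Connect worker with the sample file source and sink
# configurations. It runs from the Kafka folder because the plugin path
# and the sample file names are relative.
run_file_connectors() {
  (cd "$KAFKA_HOME" && bin/connect-standalone.sh \
    config/connect-standalone.properties \
    config/connect-file-source.properties \
    config/connect-file-sink.properties)
}

# Example usage (ZooKeeper and the broker must already be running):
#   enable_file_connector
#   echo "hello connect" > "$KAFKA_HOME/test.txt"
#   run_file_connectors
```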